As the world moves into the "cloud", telephony has made its way there too. Several vendors sell hosted VoIP solutions to customers, while others say to buy your own gear, keep it on site, and control your own destiny. I've had the opportunity to work with both, and while both have their pros and cons, companies need to understand what they are getting into and think about their future needs. IP telephony is not a one-size-fits-all service, and you have to think closely about what you will be paying for.

Hosted VoIP Pros:

You can get a feature-rich system, and all you pay for is the telephones, the switches, and a monthly fee for the services you use. Some providers offer messaging-to-email, contact center, video, and faxing services. You can get large-corporation features in your small business, which can give you an edge. You won't need an on-site telephony person to take care of your needs, and you can even provision your own phones to add new users.

On-Site Equipment Pros:

You own your own gear. This means you are free to find the cheapest service you can get from a provider. If you don't like the service contract you purchased, you can go find someone else; all you need to do is change passwords and cut access. If you have an on-site engineer, you can get changes done quickly without putting in tickets to a service desk. You can also work on crazy customizations without having to pay your provider a fee.

Hosted VoIP Cons:

You are at the mercy of the provider. They own the call processing component. If you want a feature they don't offer, you are out of luck. The engineers you work with can vary in skill. If they have an outage in the data center, you are stuck like Chuck. One provider I worked with had a broadcast storm: calls for 250 clients didn't go out to the PSTN, and the hosted call center went down. We also had several voicemail outages during my time there. And if the company goes out of business, guess what? Who are you going to get your call processing from? Costs can also sneak up on you. At a company with high turnover, MACs (moves, adds, and changes) can add up quickly. Companies that expect high turnover, like call centers, should be very careful when choosing a hosted solution.

On-Site Equipment Cons:

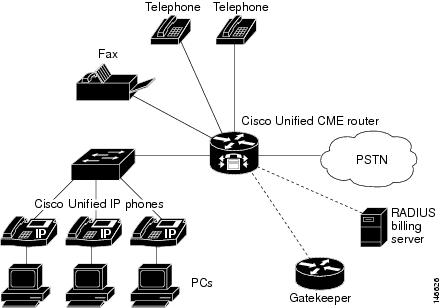

Cost, cost, cost. There are huge upfront costs associated with getting your own telephony on site. Cisco, Avaya, and Nortel can be pricey. You will have to pay for installation, and depending on the size of your company, you will have to either pay for on-site employees or contract the work out to a provider. What I have also seen is that other network folks inherit the phone system, and they just want to stay as far away from the phones as they can. Something we take for granted, like phones, can be a nightmare for the people who keep the VoIP packets flowing.
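To put rough numbers on the cost trade-off between the two options, here is a minimal Python sketch. Every figure in it is made up for illustration, and the function names (`hosted_cost`, `onsite_cost`, `break_even_month`) are hypothetical; plug in real quotes from your own vendors. It assumes hosted service is billed as a flat per-seat monthly fee plus a fee per MAC, and that on-site gear is a one-time upfront cost plus a flat monthly support cost. Real contracts are usually messier than that.

```python
def hosted_cost(months, seats, fee_per_seat, macs_per_month, mac_fee):
    """Cumulative hosted cost: per-seat monthly fees plus per-MAC charges."""
    return months * (seats * fee_per_seat + macs_per_month * mac_fee)


def onsite_cost(months, upfront, monthly_support):
    """Cumulative on-site cost: upfront purchase plus ongoing support."""
    return upfront + months * monthly_support


def break_even_month(seats, fee_per_seat, macs_per_month, mac_fee,
                     upfront, monthly_support, horizon=120):
    """First month in which hosted has cost at least as much as on-site.

    Returns None if that never happens within `horizon` months.
    """
    for month in range(1, horizon + 1):
        hosted = hosted_cost(month, seats, fee_per_seat, macs_per_month, mac_fee)
        onsite = onsite_cost(month, upfront, monthly_support)
        if hosted >= onsite:
            return month
    return None


# Hypothetical 50-seat office; all dollar amounts are invented.
low_turnover = break_even_month(seats=50, fee_per_seat=25,
                                macs_per_month=10, mac_fee=20,
                                upfront=40_000, monthly_support=500)
high_turnover = break_even_month(seats=50, fee_per_seat=25,
                                 macs_per_month=60, mac_fee=20,
                                 upfront=40_000, monthly_support=500)
print("Break-even month, low turnover:", low_turnover)
print("Break-even month, high turnover:", high_turnover)
```

With these invented numbers, raising the MAC count from 10 to 60 a month roughly halves the time it takes for hosted to overtake on-site, which is the high-turnover trap in a nutshell.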

At the end of the day, you have to decide what your company needs and what it can afford. I like both solutions, and I've seen both of them fail when IT managers didn't ask the right questions about what they were purchasing and didn't weigh their needs against it.